Why study Medicine at Flinders?

Creating leaders in health, medicine and care.

From treating patients in health clinics and providing preventative self-care to influencing healthcare policy and undertaking life-changing research, the opportunities for a career in health and medicine are limitless. At Flinders, we offer fully accredited degrees such as Clinical Sciences/Doctor of Medicine, Laboratory Medicine, and Paramedicine, alongside leading programs in Medical Science and Public Health that prepare you for a wide range of health careers.

At Flinders, you'll follow an innovative curriculum. Our Medicine degrees are developed in collaboration with industry and emphasise evidence-based practice, so you'll gain future-focused knowledge and skills, informed by the latest health and medical research, to meet the needs of the workforce. You'll learn from leading medical experts: our teaching staff are internationally recognised academics and researchers with years of clinical expertise.

Get hands-on training in our state-of-the-art facilities, including our clinical skills and simulation unit, research and medical laboratories, and paramedicine suites. Plus, you'll build your confidence with placement opportunities in a range of local, regional and remote environments, including the co-located Flinders Medical Centre, Royal Darwin Hospital, aged care facilities, and community care.

If you're ready to take your career to the next level, Flinders has a range of postgraduate degrees in areas such as Clinical Education, Counselling (Behavioural Health), Public Health and Remote Health Practice that will advance your knowledge or help you step up into a professional, specialist or leadership role.

Prevent, treat, cure and care at Flinders University. Explore your study options at Bedford Park, Darwin, our rural and remote campuses, and online.

- Associate Degree in Medical Science (Laboratory Medicine)

- Bachelor of Clinical Sciences, Doctor of Medicine

- Bachelor of Medical Science

- Bachelor of Medical Science (Honours)

- Bachelor of Medical Science (Laboratory Medicine)

- Bachelor of Medical Science (Laboratory Medicine) (Regional External Program)

- Bachelor of Paramedicine

- Bachelor of Paramedicine (Northern Territory)

- Bachelor of Paramedicine (Regional External Program)

- Bachelor of Public Health

- Bachelor of Public Health (Honours)

- Biotechnology

- Clinical Education

- Counselling (Behavioural Health)

- Doctor of Medicine

- Doctor of Medicine / Master of Public Health

- Graduate Certificate in Health Promotion (Online)

- Graduate Certificate in Public Health

- Graduate Certificate in Public Health (Online)

- Intensive Care Paramedicine

- Master of Clinical Epidemiology

- Master of Clinical Epidemiology (Online)

- Master of Public Health

- Master of Public Health (Online)

- Public Health

- Remote Health Practice

- Clinical Education

- Doctor of Philosophy

- Doctor of Philosophy (PhD) and Master of Business Administration (MBA)

- Doctor of Philosophy (PhD) by Prior Published Work

- Doctor of Philosophy (PhD) in Clinical

- Doctor of Philosophy (PhD) in Medical Biosciences

- Doctor of Philosophy (PhD) in Public Health and Rural and Remote Health

- Master of Clinical Epidemiology

- Master of Science

- Master of Surgery

Up to 80% off fees due to Government subsidies*

Explore a range of postgraduate Medicine courses with up to 80% off fees, including Counselling (Behavioural Health), Clinical Education, Clinical Epidemiology and Intensive Care Paramedicine.

*Government subsidies enable Flinders to offer domestic applicants a reduction of up to 80% off full course fees through Commonwealth Supported Places. Not available for all courses. Offer subject to change.

STUDY FOR TOMORROW WITHOUT MISSING TODAY.

- Flexibility from the start

- Practical and constantly connected

- Engaging, above-standard learning

- Supported on every level

No. 1 in Australia in Medicine for full-time employment (The Good Universities Guide 2024, postgraduate).

No. 1 in SA in Medicine for learner engagement, learning resources, overall educational experience, student support and teaching quality (The Good Universities Guide 2025, undergraduate; public SA-founded universities only).

No. 2 in Australia in Medicine for student support (The Good Universities Guide 2024, undergraduate).

Start your life-changing career in Medicine.

Watch a webinar to explore Medicine at Flinders. Learn more about career pathways, study options, topics, placement opportunities and more.

Become a leader in health, medicine and care. Discover pathways to Medicine.

Fascinated by how the body works? Start your career in Medical Science.

Be the first on the scene at the frontline of healthcare. Explore Paramedicine.

Gain two qualifications in just 1.5 years of extra study with a combined degree.

Flinders' combined degree options can help you pursue multiple passions and graduate with two degrees to broaden your career opportunities.

Northern Territory Medical Program (NTMP)

Flinders’ four-year graduate-entry Doctor of Medicine (MD) is offered entirely in the Northern Territory (NT) as a collaboration between Flinders, the NT Government Department of Health and the Australian Government Department of Health through the Rural Health Multidisciplinary Training (RHMT) program. The NTMP gives Territory residents the opportunity to study in the NT, and it prioritises NT residents as well as Aboriginal and Torres Strait Islander applicants from inside and outside the NT.

South Australia Rural Medical (SARM) Program

The SARM program has been designed for aspiring rural medical professionals, offering all four years of the postgraduate Doctor of Medicine (MD) program in rural South Australia. The program prioritises residents of rural South Australia, Aboriginal and/or Torres Strait Islander applicants and applicants from interstate with rural backgrounds.

Indigenous Entry Stream (IES)

Flinders offers Aboriginal and/or Torres Strait Islander applicants a pathway to the Doctor of Medicine via the Indigenous Entry Stream (IES). Successful IES applicants undertake a two-part program: the Preparation for Medicine Program (PMP) and Flinders University Extended Learning in Science (FUELS). The IES helps applicants develop the knowledge and skills they need to transition successfully into the medical program.

Pathways to Medicine

Students studying at Flinders University can take advantage of some flexible pathways for entry to Medicine, including:

Via the Bachelor of Clinical Sciences, Doctor of Medicine. In this stream, students can choose either a health sciences or a medical science focus in the clinical sciences degree, and may then continue through to the four-year postgraduate medical program.

Flinders offers more opportunities for its own students to study graduate-entry Medicine: up to 70% of places are reserved for Flinders graduates.

Specifically, up to 30% of places are reserved for students completing the Bachelor of Medical Science, Bachelor of Paramedicine and the Bachelor of Public Health.

Indigenous applicants may apply via the Indigenous Entry Stream (IES).

If you have an undergraduate degree but do not have a valid GAMSAT score, you can apply for entry into the IES. If you have a valid GAMSAT score, you can apply for direct entry through the Aboriginal and/or Torres Strait Islander Sub-Quota or for entry into the Indigenous Entry Stream.

Applicants with a rural background may be eligible to apply for the Doctor of Medicine through the Rural sub-quota.

Become an expert. Be fearless and continue your professional development with Flinders postgraduate health courses.

Further your career by expanding your skills in the health field. Learn today for practice tomorrow. Fit your study around work commitments with online delivery, full-time and part-time study options, and weekend intensives for some courses.

Take your medical career to the next level with a combined Doctor of Medicine / Master of Public Health (MD-MPH)

This combined program is for students who wish to combine future medical practice with work that prevents disease and improves the health of communities by implementing education programs, developing public health policies and conducting research.

Visiting Student Elective program

Partnering with local, rural and regional health networks, Flinders University offers a Visiting Medical Elective program for external medical students to undertake up to 6 weeks of placement in the disciplines of Medicine, Surgery, Psychiatry, Obstetrics & Gynaecology and Rehabilitation.

Fast facts

- Pathways to medicine available to undergraduates and graduates

- Indigenous Entry Stream available

- Curriculum adopted by other medical schools in Australia and around the world

- Unique clinical education options in metro, rural and remote Australia, and elective options almost anywhere in the world

- Study in metropolitan Adelaide or the NT

- Hands-on clinical skills development from the first week of the Doctor of Medicine

- Embedded in health services across Australia, including co-location with Flinders Medical Centre at Bedford Park

- Students engage directly with local communities, instilling the values of social accountability and responsibility

Get inspired

Start your career in Medicine

Hear from Michael Hood about his student experience at Flinders and some of the highlights of his degree.

Start your career in Medical Science

Are you fascinated by how the body works? Have you ever wondered about neuroscience? Or maybe you can see yourself as a human molecular geneticist?

Start your career in Paramedicine

Ambulance officers and paramedics are growth occupations, with employment projected to increase by up to 20% over the next five years.*

*Labour Market Information Portal, Department of Jobs and Small Business

Start your career in Public Health

If you are passionate about having those uncomfortable and difficult conversations, or interested in global health challenges of today, there has never been a better time to study public health.

Fast-track your medicine degree with direct entry for school leavers

While most medicine degrees around Australia are graduate-entry only, you can step straight into Medicine at Flinders.

School leavers can complete a medical degree in six years, developing hands-on experience from the first week. As leaders in educating future doctors and medical specialists, we have developed a curriculum that has been adopted by other medical schools in Australia and around the world. Co-located with Flinders Medical Centre in Adelaide, you have the opportunity to undertake authentic, patient-centred clinical experiences from early in the degree. You can also choose to study in Darwin or at one of our many rural hubs.

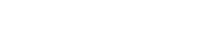

Our commitment to Indigenous health

We recognise and value the contribution that Indigenous knowledge and Indigenous doctors make to the health of our country

Our Indigenous and non-Indigenous academic staff will lead you to a greater understanding of this heritage, and teach you how to work safely and confidently in a multicultural society. You’ll have the opportunity to undertake placements in Indigenous communities and you’ll help to improve the health of our First Nations people. We also have an Indigenous entry stream, which provides greater opportunities for Indigenous Australians to study medicine at Flinders.

Seek out placements around the world

Experience a variety of health settings.

Electives and placements can be undertaken almost anywhere in the world. You can put theory into practice in metropolitan Adelaide, rural South Australia, remote communities in the Northern Territory, or international locations such as China, India, the Philippines, Nepal, Sri Lanka, Thailand, Germany, Canada, the US and the UK. Prepare yourself to have a global influence on a rapidly changing health industry while improving the wellbeing of individuals. With all the opportunities on offer, you will experience a diverse array of patients and environments.

Sturt Rd, Bedford Park

South Australia 5042

South Australia | Northern Territory

Global | Online

CRICOS Provider: 00114A TEQSA Provider ID: PRV12097 TEQSA category: Australian University